See the gallery of stimuli.

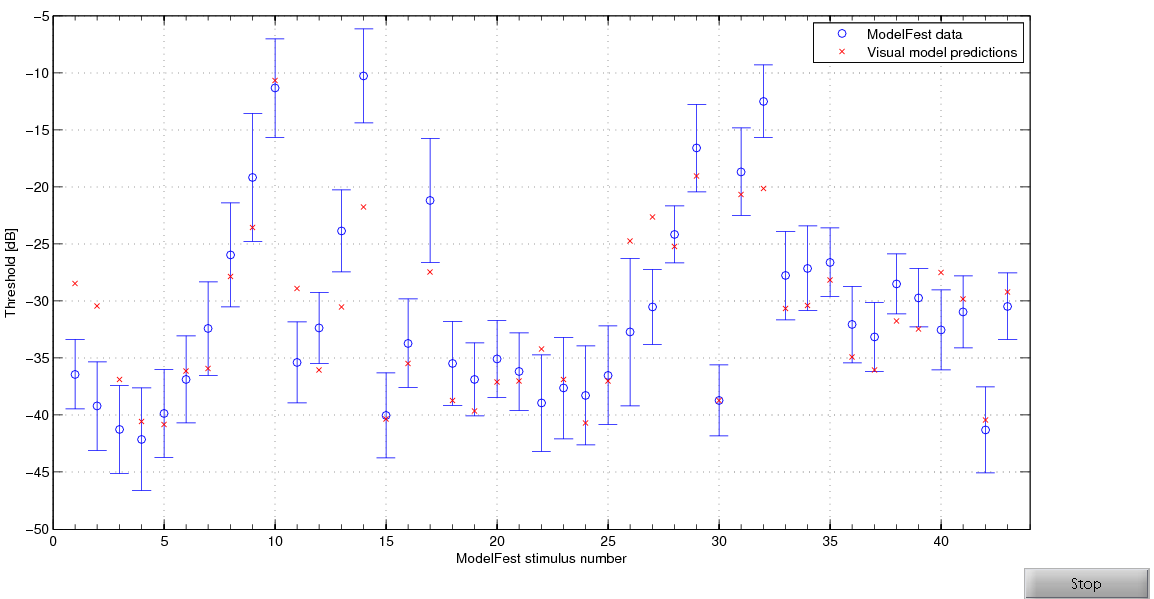

ModelFest is a standard data set created to calibrate and validate visual metrics. It contains 43 small detection targets on a uniform background of 30 cd/m².

More about this data set can be found in: Watson, A.B., and A.J. Ahumada Jr. "A standard model for foveal detection of spatial contrast." Journal of Vision 5, no. 9 (2005).

From the ModelFest website:

> Since 1996, the annual meeting of the Optical Society of America has hosted a symposium entitled ModelFest. Conceived originally as a workshop in which those involved in the development of models of early human vision would demonstrate and discuss their work, it has more recently adopted as part of its program the establishment of a public, communal set of data. The purpose of the data set would be twofold: 1) to calibrate and 2) to test vision models. The data set was required to be large and varied enough to adequately serve both purposes. It was also hoped that the complete data set would be collected by a number of different labs, to enhance both the generality and accuracy of the data set. Thus the data set was constrained to be small enough that a large enough number of labs would volunteer. Acting through consensus, the informal group decided upon an initial effort (Phase 1) consisting of 44 two-dimensional monochromatic patterns confined to an area of approximately two by two degrees.
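To make the description of the targets concrete, here is a minimal sketch of a hypothetical Gabor detection target rendered as luminance on the 30 cd/m² background. It assumes the target is specified as a contrast modulation of the background, L = L_b (1 + c·g(x, y)), where g is a windowed cosine. The function name `gabor_luminance` and every stimulus parameter (size, pixels per degree, spatial frequency, envelope width, contrast) are illustrative assumptions, not ModelFest's actual stimulus definitions.

```python
import math

BACKGROUND_CD_M2 = 30.0  # uniform background luminance stated for ModelFest


def gabor_luminance(size_px, px_per_deg, freq_cpd, sigma_deg, contrast,
                    bg=BACKGROUND_CD_M2):
    """Return a size_px x size_px luminance map (cd/m^2) of a cosine-phase Gabor.

    Hypothetical illustration: the target is modelled as a contrast modulation
    of the background, L = bg * (1 + contrast * carrier * envelope).
    """
    half = (size_px - 1) / 2.0
    rows = []
    for j in range(size_px):
        y = (j - half) / px_per_deg  # vertical position in degrees
        row = []
        for i in range(size_px):
            x = (i - half) / px_per_deg  # horizontal position in degrees
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma_deg ** 2))
            carrier = math.cos(2.0 * math.pi * freq_cpd * x)
            row.append(bg * (1.0 + contrast * carrier * envelope))
        rows.append(row)
    return rows


# Illustrative parameters only: 65 px at 32 px/deg covers about 2 x 2 degrees.
img = gabor_luminance(size_px=65, px_per_deg=32, freq_cpd=4.0,
                      sigma_deg=0.25, contrast=0.01)
flat = [v for row in img for v in row]
print(round(max(flat), 3), round(min(flat), 3))
```

With a contrast of 0.01, every pixel stays within 1% of the 30 cd/m² background, and the corners of the patch are essentially at the background level.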

This is an implementation of the original Visible Differences Predictor (VDP), based on correspondence with its author and on the book chapter: Daly, S. "The Visible Differences Predictor: An Algorithm for the Assessment of Image Fidelity." In Digital Images and Human Vision, edited by Andrew B. Watson, 179-206. MIT Press, 1993.

The metric uses the default parameters from the chapter, except for the masking slope, which is set to 0.9 for all bands; this value was found by fitting the metric to the masking data sets. One optional component of the VDP is the computation of contrast in the cortex-filtered images, which can be either global or local. This version uses global contrast.
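The two ideas in this paragraph can be sketched in a few lines. Global contrast divides each cortex band by the mean luminance of the whole image, whereas local contrast divides it by a low-pass (baseband) luminance at each location. Masking is sketched as a threshold-elevation function whose log-log slope at high contrast equals the masking slope. This is a minimal sketch under stated assumptions: the threshold-elevation form and the constants `K1`, `K2` and `B` are hypothetical placeholders, and only the slope of 0.9 comes from the text. Images are represented as flat lists of samples for simplicity.

```python
MASKING_SLOPE = 0.9  # value stated in the text, used for all bands

# Hypothetical placeholder constants for illustration only; not taken from
# the text. Check Daly's chapter for the values used in the actual VDP.
K1 = 0.0153
K2 = 392.5
B = 4.0


def global_contrast(band, image):
    """Global contrast: divide a band-pass (cortex-filtered) image by the mean
    luminance of the whole input image."""
    mean_lum = sum(image) / len(image)
    return [b / mean_lum for b in band]


def local_contrast(band, baseband):
    """Local contrast (the alternative not used in this version): divide each
    band sample by the low-pass (baseband) luminance at the same location."""
    return [b / max(l, 1e-6) for b, l in zip(band, baseband)]


def threshold_elevation(contrast, slope=MASKING_SLOPE, k1=K1, k2=K2, b=B):
    """Assumed masking model: (1 + (k1 * (k2 * |c|)**slope)**b)**(1/b).

    Near zero contrast it returns 1 (no masking); at high contrast it grows
    approximately as |c|**slope.
    """
    m = k1 * (k2 * abs(contrast)) ** slope
    return (1.0 + m ** b) ** (1.0 / b)


image = [20.0, 40.0, 30.0, 30.0]  # toy luminance samples, mean 30 cd/m^2
band = [0.3, -0.3, 0.0, 0.6]      # toy cortex-band responses
contrast = global_contrast(band, image)
print([round(c, 3) for c in contrast])  # -> [0.01, -0.01, 0.0, 0.02]
print(threshold_elevation(0.0))         # -> 1.0
```

Because global contrast uses a single normalizing value for the whole image, it is cheaper than local contrast but ignores spatial variations in adaptation luminance.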

The metric also includes an improved variant of phase uncertainty, as described in: Lukin, A. "Improved Visible Differences Predictor Using a Complex Cortex Transform." In International Conference on Computer Graphics and Vision (GraphiCon), 2009. Phase uncertainty is not mentioned in the 1993 book chapter but is described in the patent application. Lukin's method achieves the same goals as phase uncertainty but is more elegant and computationally more efficient than the approach described in the patent.
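Lukin's method replaces the real-valued cortex bands with complex-valued ones. The hedged 1D sketch below is not Lukin's transform; it only illustrates the property it relies on, assuming the shared goal with phase uncertainty is to make masking insensitive to the local phase of the masker. The magnitude of a complex (quadrature) band response does not depend on that phase. Here the analytic signal, computed with a naive DFT, stands in for a complex cortex band; the helper names `dft`, `idft` and `analytic_signal` are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Naive O(N^2) inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def analytic_signal(x):
    """Complex quadrature response: keep DC and Nyquist, double the positive
    frequencies and zero the negative ones (len(x) assumed even)."""
    n = len(x)
    X = dft(x)
    h = [0.0] * n
    h[0] = 1.0
    h[n // 2] = 1.0
    for k in range(1, n // 2):
        h[k] = 2.0
    return idft([Xk * hk for Xk, hk in zip(X, h)])


N = 32
for phase in (0.0, math.pi / 3, math.pi / 2):
    band = [math.cos(2 * math.pi * 4 * t / N + phase) for t in range(N)]
    envelope = [abs(z) for z in analytic_signal(band)]
    # The real response changes with phase; the magnitude stays at 1.0
    # (up to floating-point rounding) for every phase.
    print(round(min(band), 2), round(min(envelope), 6), round(max(envelope), 6))
```

A real-valued band response passes through zero wherever the masker does, so a masking model driven by it would predict phase-dependent masking; the complex magnitude avoids this without any extra smoothing step.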